This story begins before the 1960s...

At that time, container shipping had not yet caught on, and almost all goods were shipped as break-bulk cargo, handled piece by piece.

Take the "Warrior" as an example. On one voyage from Brooklyn to Bremerhaven (the port of Bremen), the cargo was loaded and unloaded by ordinary dockworkers. The ship carried 5,015 tons of goods, mainly food, household goods, mail, machinery and vehicle parts, plus 53 vehicles. The shipment comprised a staggering 19,482 separate items of different sizes and types.

Dockworkers placed every item on pallets by hand. The pallets were then lowered into the hold, where the goods were stowed one by one. Loading the ship took 6 days in total, and the crossing of the Atlantic took 10 and a half days. In Bremerhaven, dockworkers worked around the clock and still needed 4 days to unload the ship.

In short, roughly half of the whole trip was spent at the pier.
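A quick check of "half" uses only the durations given above: 6 days loading, 10.5 days crossing and 4 days unloading.

```latex
\begin{aligned}
t_{\text{port}} &= 6 + 4 = 10 \text{ days}, \qquad t_{\text{sea}} = 10.5 \text{ days},\\
\frac{t_{\text{port}}}{t_{\text{port}} + t_{\text{sea}}} &= \frac{10}{20.5} \approx 0.49
\end{aligned}
```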

Why load, unload, transfer and reload so much loose cargo piece by piece? Why not put the goods into big boxes, and then just load, unload and move the boxes?

The container was born.

Once containers came into wide use in the transportation industry, a 35-ton container of coffee makers could leave a factory in Malaysia, be loaded onto a freighter, and reach Los Angeles, 9,000 miles away, after a 16-day voyage. A day later, the container travels by train to Chicago and is immediately transferred to a truck bound for Cincinnati. The 11,000-mile trip from the factory in Malaysia to the warehouse in Ohio can take as little as 22 days, a rate of 500 miles per day. It costs less than a one-way first-class air ticket. Moreover, it is very likely that no one touches the contents of the container along the way, or even opens it. Compared with traditional freighters, container ships need only about 1/6 of the time and 1/3 of the labor for loading and unloading.
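The quoted speed follows directly from the stated distance and duration. By the same arithmetic, the ocean leg alone averages a little more:

```latex
\begin{aligned}
\text{whole trip: } \frac{11{,}000 \text{ miles}}{22 \text{ days}} &= 500 \text{ miles/day},\\
\text{ocean leg: } \frac{9{,}000 \text{ miles}}{16 \text{ days}} &= 562.5 \text{ miles/day}.
\end{aligned}
```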

The idea of "container technology" that we are going to talk about today comes from this.

What problem does the container solve? On a large ship, goods of every type can be packed into standard containers, from milk powder and cosmetics to machinery and sports cars. The shipper only needs to make sure the cargo is sealed and secured inside the container. The shipper does not need to care how the container is stowed or transported.

The carrier, in turn, does not need to care what each container holds. It only needs to treat each container as a closed, undifferentiated, independent unit for loading, unloading, stacking and transport. The container stays closed throughout the whole process until it reaches its destination. On ships, trains and trucks alike, cranes can handle the containers and automate the process. This solves the problem of moving many different kinds of goods over long distances very cheaply.

Like the shipping container, container technology has brought developers a great deal of convenience and saved a lot of cost, in both development and operations.

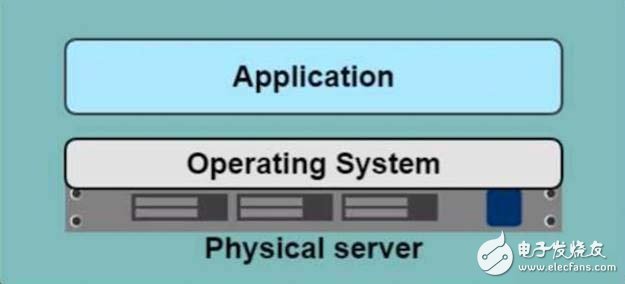

The history of container technology

Long ago, deploying an application on a server took several steps. You first had to buy a physical server, install an operating system on it, and then install the various dependencies the application required. Only then could you deploy the application, and each server hosted only one application.

This has caused the following obvious problems:

● Deploying applications is very slow

● The cost is very high

● Resources are easily wasted, because a single application often cannot use all of a server's resources

● Migration and expansion are difficult

● Migration problem: to migrate an application, you have to repeat the whole deployment process (buy a server → install the OS → configure the environment → deploy the application)

● Expansion problem: you can only buy new hardware to upgrade the physical server, or buy a more powerful server, which again brings up the migration problem

● Vendor lock-in: you may be tied to a particular hardware manufacturer, because different hardware platforms existed at the time

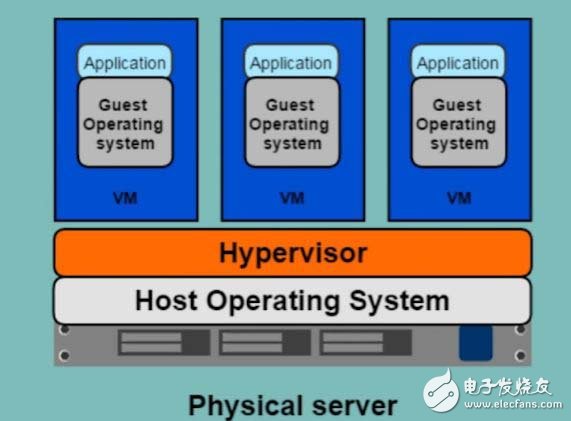

Virtualization changed this. It adds an extra layer, the Hypervisor, either directly on the physical server or on top of the host operating system. The Hypervisor is an intermediate software layer that virtualizes hardware resources such as CPU, disk and memory. We can then install an operating system on these virtualized resources, which gives us what is called a virtual machine.

Through the Hypervisor layer, we can create different virtual machines and limit the physical resources of each one, and each virtual machine is separate and independent. For example, virtual machine A might use 2 CPUs, 8 GB of memory and a 100 GB disk. Virtual machine B might use 4 CPUs, 16 GB of memory and a 300 GB disk, and so on. In this way, physical resources are used much more fully.
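This allocation can be sketched as a toy model. A hypervisor holds a pool of host resources and reserves a fixed slice for each virtual machine. The host size (16 CPUs, 64 GB memory, 1,000 GB disk) and the class names are hypothetical. Real hypervisors can also overcommit CPU and memory, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    """CPU cores, memory (GB) and disk (GB) of a host or a virtual machine."""
    cpu: int
    mem_gb: int
    disk_gb: int

    def fits_in(self, other: "Resources") -> bool:
        return (self.cpu <= other.cpu and self.mem_gb <= other.mem_gb
                and self.disk_gb <= other.disk_gb)

    def __sub__(self, other: "Resources") -> "Resources":
        return Resources(self.cpu - other.cpu, self.mem_gb - other.mem_gb,
                         self.disk_gb - other.disk_gb)


class HypotheticalHypervisor:
    """Toy model: carves a physical host's resources into fixed VM allocations."""

    def __init__(self, host: Resources):
        self.free = host
        self.vms: dict[str, Resources] = {}

    def create_vm(self, name: str, want: Resources) -> None:
        # A VM's share is pre-allocated and reserved exclusively, so it leaves
        # the free pool immediately, even if the VM later sits idle.
        if not want.fits_in(self.free):
            raise RuntimeError(f"not enough free resources for {name}")
        self.vms[name] = want
        self.free = self.free - want


hv = HypotheticalHypervisor(Resources(cpu=16, mem_gb=64, disk_gb=1000))
hv.create_vm("A", Resources(cpu=2, mem_gb=8, disk_gb=100))
hv.create_vm("B", Resources(cpu=4, mem_gb=16, disk_gb=300))
print(hv.free)  # Resources(cpu=10, mem_gb=40, disk_gb=600)
```

Because each slice is reserved up front, a request larger than the remaining pool is rejected. This is the rigidity the following paragraphs contrast with containers.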

With virtualization:

● One physical machine can host multiple applications

● Each application can run independently in its own virtual machine

● Resource pooling: a physical machine's resources are allocated among different virtual machines

● Easy to scale: add physical machines or virtual machines, since virtual machines can be cloned

● Easy to move to the cloud: Amazon AWS, Alibaba Cloud, Google Cloud, etc.

Over time, however, users found the hypervisor approach increasingly cumbersome.

Why? In a hypervisor environment, each virtual machine has to run a complete operating system with a large number of programs installed in it. In real production and development work, however, what we care about most is the application we deploy ourselves. If every deployment and release requires building a complete operating system and its dependencies, the work becomes very heavy and performance suffers.

This led people to ask whether they could focus on the application itself while sharing and reusing the redundant operating system and environment underneath. In other words, once I have deployed and started a service, I want to move it elsewhere without installing an operating system and dependencies again.

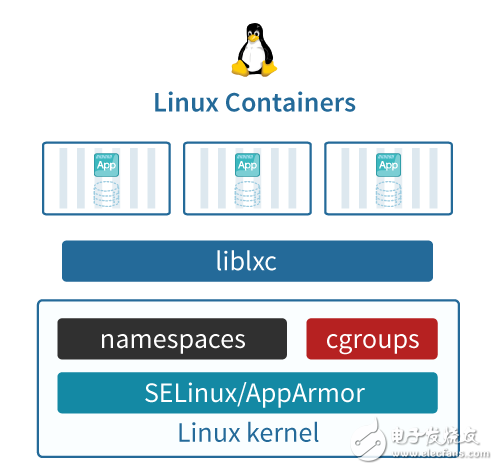

The birth of Linux Container technology in 2008 solved the "container shipping" problem of the IT world. Linux Container (LXC for short) is a lightweight, kernel-based, operating-system-level virtualization technology: all containers share the host's Linux kernel.

Linux Containers rely mainly on two kernel mechanisms: Namespaces and Cgroups.

We mentioned shipping containers just now. A container's job, of course, is to pack and isolate goods. This keeps the goods of company A and company B from mixing, so they can be told apart when unloaded. Namespaces do the same job: they provide isolation.
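You can see namespaces directly on a Linux system, because every process exposes its namespaces as symbolic links under /proc/<pid>/ns. The namespaces(7) man page states that two processes are in the same namespace when these links show the same inode number. The minimal sketch below lists them for the current process. It assumes Python 3.9+ on Linux, and the function names are our own.

```python
import os

NS_DIR = "/proc/self/ns"  # Linux-specific; absent on macOS/Windows


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a link target such as 'pid:[4026531836]' into ('pid', 4026531836)."""
    kind, _, rest = target.partition(":")
    return kind, int(rest.strip("[]"))


def current_namespaces() -> dict[str, int]:
    """Map each namespace entry of this process to its inode number."""
    result: dict[str, int] = {}
    try:
        names = sorted(os.listdir(NS_DIR))
    except OSError:
        return result  # not Linux, or /proc is not mounted
    for name in names:
        try:
            _kind, inode = parse_ns_link(os.readlink(os.path.join(NS_DIR, name)))
        except (OSError, ValueError):
            continue  # some entries can be unreadable in restricted sandboxes
        result[name] = inode
    return result


if __name__ == "__main__":
    # Run once on the host and once inside a container: entries whose inode
    # differs are namespaces the container does not share with the host.
    for name, inode in current_namespaces().items():
        print(f"{name:<20} {inode}")
```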

Isolation alone is not enough; we also need to manage the resources the goods use. A shipping terminal has a similar management mechanism. It decides what size and how many containers to use for the goods, and which goods get priority. It also decides how to suspend shipping in extreme weather, how to change routes, and so on. Correspondingly, Cgroups handle resource management and control. For example, they limit the CPU and memory a process group can use, control process-group priority, and suspend and resume process groups.
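On current Linux systems, these Cgroup controls are exposed as cgroup v2 interface files:

- `cpu.max` sets the CPU limit.
- `memory.max` sets the memory limit.
- `cpu.weight` sets the priority.
- `cgroup.freeze` suspends and resumes the group.

The sketch below only builds the file contents; the helper names are hypothetical. Actually writing the files needs several things:

- root privileges;
- a cgroup v2 hierarchy mounted at /sys/fs/cgroup (an assumption);
- the cpu and memory controllers enabled in the parent group's cgroup.subtree_control;
- Linux 5.2 or later, for cgroup.freeze.

```python
from pathlib import Path

CGROUP_ROOT = Path("/sys/fs/cgroup")  # usual cgroup v2 mount point (assumption)
PERIOD_US = 100_000                   # the kernel's default cpu.max period


def cgroup_v2_settings(cpu_fraction: float, memory_bytes: int,
                       weight: int = 100) -> dict[str, str]:
    """Translate resource limits into cgroup v2 interface-file contents.

    cpu.max    "<quota> <period>" in microseconds: CPU time allowed per period
    memory.max hard memory limit in bytes
    cpu.weight relative priority under contention (1..10000, default 100)
    """
    if cpu_fraction <= 0:
        raise ValueError("cpu_fraction must be positive")
    if not 1 <= weight <= 10_000:
        raise ValueError("cpu.weight must be in 1..10000")
    return {
        "cpu.max": f"{int(cpu_fraction * PERIOD_US)} {PERIOD_US}",
        "memory.max": str(memory_bytes),
        "cpu.weight": str(weight),
    }


def apply(group: str, settings: dict[str, str], pid: int) -> None:
    """Create the group, write the limits, then move `pid` into it (needs root)."""
    path = CGROUP_ROOT / group
    path.mkdir(exist_ok=True)
    for filename, value in settings.items():
        (path / filename).write_text(value)
    (path / "cgroup.procs").write_text(str(pid))


def set_frozen(group: str, frozen: bool) -> None:
    """Suspend ("1") or resume ("0") every process in the group."""
    (CGROUP_ROOT / group / "cgroup.freeze").write_text("1" if frozen else "0")


# Half a CPU, 256 MiB of memory, and half the default priority.
settings = cgroup_v2_settings(cpu_fraction=0.5, memory_bytes=256 * 1024**2, weight=50)
print(settings)
# As root on a cgroup v2 host you could then run, for example:
#   apply("demo", settings, pid); set_frozen("demo", True)
```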

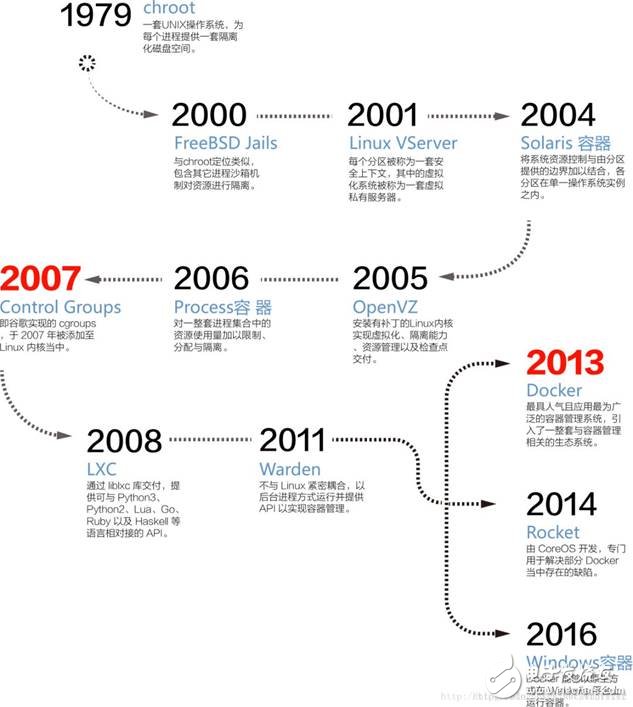

The figure above shows the evolution of container technology. Today, Docker is almost synonymous with containers.

With that, let's summarize the characteristics of container technology:

1. Resource independence and isolation

Docker uses Linux Namespaces and Cgroups to isolate the software runtime environment and limit hardware resources. This keeps each application apart from the others on the host, so they do not affect each other. Applications and services are loaded onto and unloaded from the "ship" in units of "containers". On a "container ship" (a host or cluster running containers), thousands of "containers" are stacked neatly. The "goods" of different companies and of different types remain independent of one another. Here the "goods" are the programs, components, runtime environment and dependencies required to run applications.

This is exactly the most basic requirement of a cloud computing platform.

2. Consistency of the environment

After developers finish the application, they build a Docker image. A container created from this image is like a shipping container. It packs all the "bulk goods": the programs, components, runtime environment and dependencies required to run the application. The type and quantity of "goods" inside are exactly the same wherever the container runs, whether in development, testing or production. No software package will be missing in the test environment, and no environment variable will be forgotten in production. Nor will the application misbehave because different versions of a dependency were installed in development and production. This consistency comes from sealing everything into the "container" at "shipping" time, when the Docker image is built. Every later stage transports this complete "container" without splitting or repacking it.
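The "sealed container" idea can be sketched with a content digest. Docker identifies images and layers by SHA-256 digests of their content. The function below is not Docker's real format, which is built from layer tarballs and JSON metadata. It is a hypothetical illustration: identical sealed contents give the same digest wherever they are checked, and any drift gives a different one.

```python
import hashlib


def image_digest(files: dict[str, bytes]) -> str:
    """Hypothetical content digest of a 'sealed' set of files.

    Paths are sorted so the result does not depend on insertion order, and
    each path and its content are length-prefixed so that different file
    layouts cannot produce the same byte stream.
    """
    h = hashlib.sha256()
    for path in sorted(files):
        data = files[path]
        encoded = path.encode()
        h.update(len(encoded).to_bytes(8, "big") + encoded)
        h.update(len(data).to_bytes(8, "big") + data)
    return "sha256:" + h.hexdigest()


built = {"app.py": b"print('hi')\n", "requirements.txt": b"requests==2.31.0\n"}
dev = image_digest(built)
prod = image_digest(dict(reversed(list(built.items()))))  # same files, other order
drifted = image_digest({**built, "requirements.txt": b"requests==2.32.0\n"})
print(dev == prod, dev == drifted)  # True False
```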

3. Lightweight

Traditional virtual machines create a virtual system through hardware virtualization. Each virtual machine has its own memory, hard disk and operating system, and occupies all of its pre-allocated resources. Using virtual machines to isolate applications therefore wastes a lot of resources. An application plus its dependencies is only tens to hundreds of megabytes in size, while the operating system often takes up about 10 GB.
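The waste can be put into rough numbers. The sketch below uses the figures from the text: about 10 GB per operating system and tens to hundreds of megabytes per application. It also assumes a workload of 10 applications of 200 MB each. It simplifies by giving containers no OS copy of their own and ignores base-image layers.

```python
def footprint_gb(apps: int, app_gb: float, os_gb: float, shared_os: bool) -> float:
    """Rough disk footprint: one OS copy per app (VMs) or one shared OS (containers)."""
    os_copies = 1 if shared_os else apps
    return os_copies * os_gb + apps * app_gb


# Assumed workload: 10 apps of 200 MB each; ~10 GB per OS as stated in the text.
APPS, APP_GB, OS_GB = 10, 0.2, 10.0
vms = footprint_gb(APPS, APP_GB, OS_GB, shared_os=False)
containers = footprint_gb(APPS, APP_GB, OS_GB, shared_os=True)
print(f"VMs: {vms:.0f} GB, containers: {containers:.0f} GB")
```

Under these assumptions, the operating-system copies, not the applications, dominate the virtual-machine footprint.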

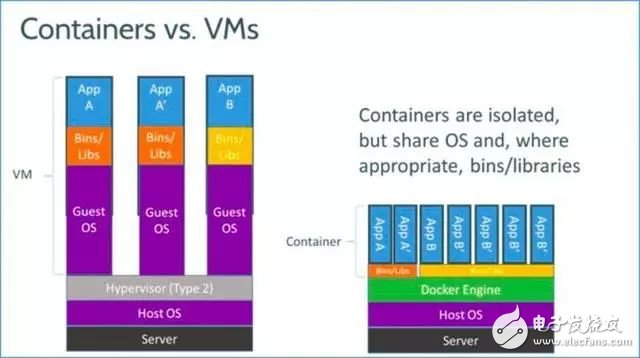

Figure: Comparison of container technology and virtual machines

The figure above shows the difference between containers and virtual machines. A container holds the application and its dependencies. It needs no exclusive resources and has no virtualized guest system of its own. Instead, it shares the hardware and the operating system with the host, and shares the kernel with other containers, so resources can be allocated dynamically. Multiple containers run as independent processes in the user space of the same host operating system, which makes them much lighter than virtual machines. Nearly a hundred containers can run on one host at the same time, so scaling an application horizontally is very convenient. Starting the same number of virtual machines on one host is almost impossible. Restarting a container is like restarting a process, while restarting a virtual machine means rebooting an operating system.

4. Build Once, Run Everywhere

The "cargo" (an application) may move between "trucks", "trains" and "ships", that is, private clouds, public clouds and other services. Only the "Docker container" needs to be migrated, because it follows a standard specification and standard handling methods. This reduces time-consuming, laborious manual "loading and unloading" (bringing applications online and taking them offline) and saves a great deal of time and labor. In the future, this may let just a few operations staff run container clusters that host ultra-large-scale online applications.

Does all this sound familiar?

Isn't this exactly what the Internet of Things platforms that have become popular in recent years keep promoting?

IoT platform vendors will often tell you something like this. Their platform provides a variety of development tools, and you can build programs by drag and drop. You don't have to worry about the underlying operating system or the environment you depend on, and can focus on your customers' needs and the application itself...

Technologies such as OpenStack and CloudStack solve the problems of the IaaS layer, whereas container technology mainly addresses the technical implementation of the PaaS layer.
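The IaaS/PaaS split can be made concrete with the commonly cited division of responsibilities between user and provider. The layer names and boundaries below are a widely used simplification, not taken from this article's figure, and real offerings vary. Under PaaS, the user manages only the application and its data. The platform underneath is the part that container technology helps implement.

```python
# Bottom-to-top layers of a typical application stack.
LAYERS = ["networking", "storage", "servers", "virtualization",
          "operating system", "middleware", "runtime", "data", "applications"]

# Index of the lowest layer the user still manages; the provider manages
# everything below it. A common simplification; real offerings vary.
FIRST_USER_LAYER = {
    "on-premises": 0,
    "IaaS": LAYERS.index("operating system"),
    "PaaS": LAYERS.index("data"),
    "SaaS": len(LAYERS),
}


def user_managed(model: str) -> list[str]:
    """Layers the user is responsible for under the given service model."""
    return LAYERS[FIRST_USER_LAYER[model]:]


for model in FIRST_USER_LAYER:
    print(f"{model:<12} user manages: {', '.join(user_managed(model)) or 'nothing'}")
```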

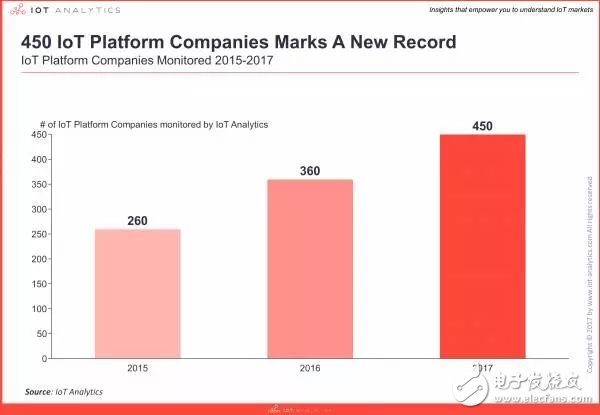

The most widely used open-source cloud platforms and architectures, such as Kubernetes, Cloud Foundry and serverless, are driven by container technology. Almost all of the hundreds of IoT platforms on the market are PaaS platforms.

Chart: 2015-2017 Number of IoT Platform Companies

Figure: Division of user management and platform functions in each XaaS

By now, the importance of container technology should be clear.

Container technology has brought disruptive breakthroughs to software development and to system operations and maintenance. As it becomes widespread, the efficiency of system development and management is bound to rise to a new level, bringing enterprises immeasurable benefits.
