As technology has advanced, robotic arms are no longer the machines we remember, able to perform only a single task on a factory floor. They have evolved to look more and more like dexterous human hands. Although a large gap remains between them and the flesh-and-blood-precise manipulators of science fiction, AI laboratories around the world have developed a variety of "hands" that are expanding what we imagine robots can do. Combined with powerful algorithms, they can push, pull, pick, and place all kinds of objects, and even rotate and manipulate objects held in the hand.

Spin

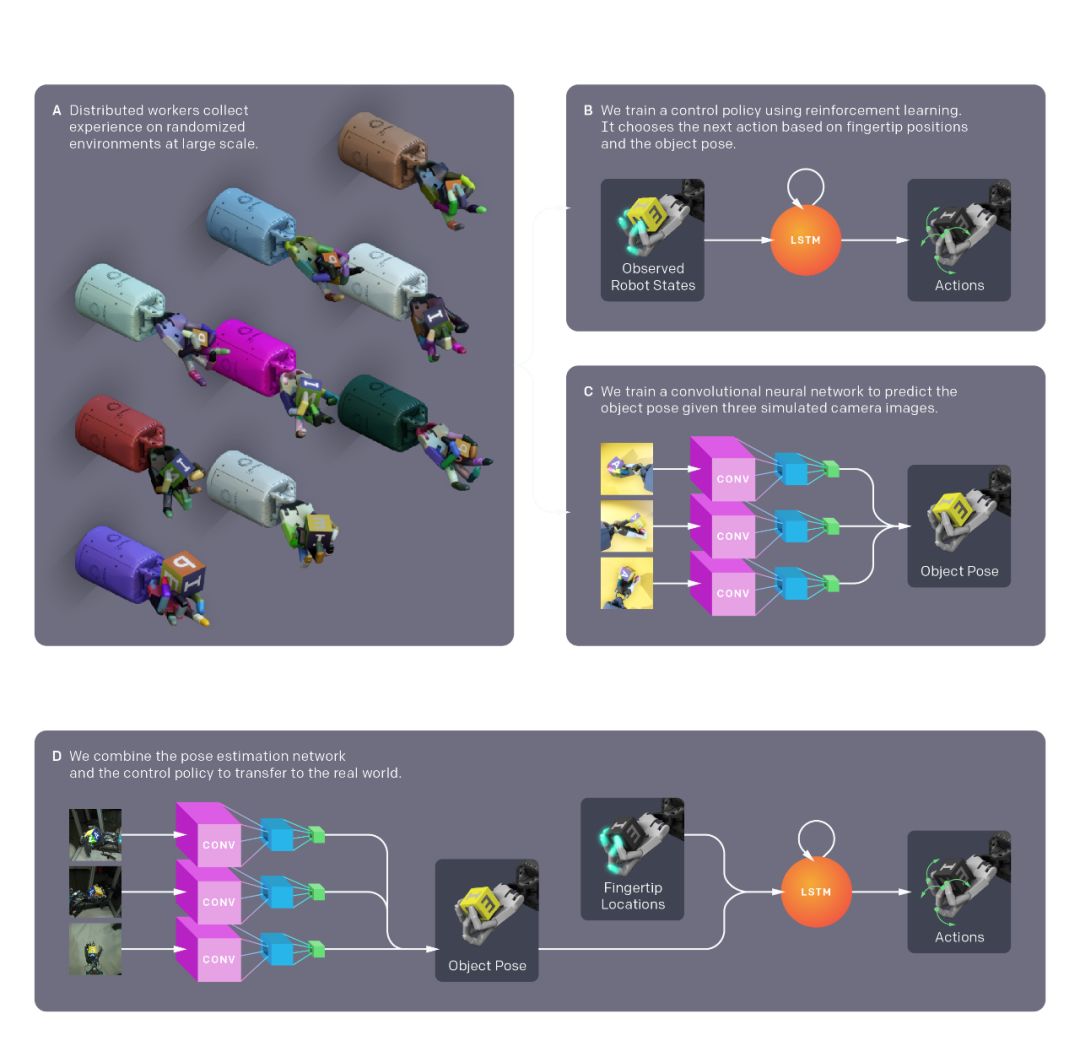

The best-known robotic hand of late is OpenAI's Dactyl. Trained with deep learning algorithms, it has mastered a complex human action: flipping a cube in the palm of its hand.

Using complex models and a great deal of training, Dactyl learns to carry out intricate manipulations. Most of the training runs in simulation on computer hardware, and the results are then transferred to the real world to achieve dexterous flipping. Researchers believe this method can be used to train it on more complex operations.

This work represents a huge leap in robotic hand research. But alongside such complex, highly bionic manipulators, researchers are also studying how simpler "hands" can accomplish simple tasks.

Grip

Researchers at AutoLab at the University of California, Berkeley built a two-fingered gripper that picks up small tools such as screwdrivers and pliers and places them in a designated box.

Because a gripper is easier to control than a five-fingered manipulator, its software is also much simpler than that of a bionic hand. That makes it a good grasping tool in many simple situations. It can also handle objects that differ slightly from those it knows. A bottle of salad dressing, for example, resembles the long cylindrical shape of a screwdriver, so the gripper can find a suitable point to grab it. But when it encounters a shape far from any it knows, such as a bracelet, it struggles to grasp it.

Pick up

What if you want the robot to grasp a wider range of objects, even ones it has never seen before? AutoLab scientists have worked on this problem for the past few years and developed a framework called Dex-Net that helps robots recognize and grasp objects of different shapes.

The system's hardware is very simple, combining two kinds of gripping tools: a gripper and a suction cup. Yet this simple combination can pick up a very wide range of objects, handling everything from scissors to toy dinosaurs with ease.

This is mainly due to the advanced machine learning algorithms built into the system. Researchers modeled more than 10,000 objects and identified the best grasping strategy for each. A neural network was then trained on this data, learning to recognize from images the best way to grasp an object. The result is a qualitative improvement in the robot's grasping. Whereas each task previously had to be programmed by hand, the robot can now learn to grasp objects on its own.

With this system, the robot can pick objects from a completely random pile. The system is not perfect, but it can keep learning and improve its performance far faster than was possible in the past.
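To make the idea of choosing "the best way to grasp" concrete, here is a minimal Python sketch of the selection step only. It is not Dex-Net's actual code or API. The `Grasp` fields, the `select_best_grasp` helper, and the `toy_predictor` scores are all assumptions made up for illustration; in a real system the scores would come from a trained neural network looking at an image.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Grasp:
    """One candidate grasp. Fields are illustrative, not Dex-Net's data format."""
    tool: str      # "gripper" or "suction"
    x: float       # grasp point in image coordinates (pixels)
    y: float
    angle: float   # gripper orientation in radians (unused for suction)


def select_best_grasp(
    candidates: Sequence[Grasp],
    predict_success: Callable[[Grasp], float],
) -> Grasp:
    """Return the candidate with the highest predicted success score."""
    if not candidates:
        raise ValueError("no candidate grasps to choose from")
    return max(candidates, key=predict_success)


def toy_predictor(grasp: Grasp) -> float:
    """Hypothetical stand-in for a trained network: a fixed score per tool."""
    return {"gripper": 0.62, "suction": 0.87}[grasp.tool]


candidates = [
    Grasp("gripper", 120.0, 80.0, 1.57),
    Grasp("suction", 118.0, 84.0, 0.0),
]
best = select_best_grasp(candidates, toy_predictor)
print(best.tool)
```

Passing the scoring function in as an argument keeps the selection logic the same whether the scores come from a lookup table, as here, or from a learned model.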

Make the bed

Robots may not yet be perfect housekeepers, but in some respects they have shown impressive results. Researchers recently developed a robot that can make a bed automatically. The system analyzes the current state of the bed and plans a series of actions to tidy it.

Push

A robot can also use its gripper to push an object across a flat surface and predict where it will end up. This means the robot can move objects to any position on a tabletop, much as you or I would.

The system learned how to push objects from a large number of videos, which lets it cope with uncertain and unexpected movements.
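As a rough illustration of predicting where a pushed object ends up, the sketch below fits a one-parameter model to a few recorded pushes and uses it to predict a new one. This is a deliberately simplified toy, not the researchers' method: the real system learns from video, while this example assumes one-dimensional motion, a linear relationship, and made-up measurements. The function names are hypothetical.

```python
from typing import Sequence, Tuple


def fit_push_gain(samples: Sequence[Tuple[float, float]]) -> float:
    """Least-squares fit of travel = gain * push_distance through the origin."""
    numerator = sum(push * travel for push, travel in samples)
    denominator = sum(push * push for push, _ in samples)
    if denominator == 0.0:
        raise ValueError("need at least one sample with a nonzero push distance")
    return numerator / denominator


def predict_final_position(start: float, push_distance: float, gain: float) -> float:
    """Predict where the object comes to rest along the push direction."""
    return start + gain * push_distance


# Made-up observations: (push distance in metres, distance the object travelled).
observed = [(0.10, 0.08), (0.20, 0.16), (0.30, 0.24)]
gain = fit_push_gain(observed)
print(round(predict_final_position(start=0.50, push_distance=0.25, gain=gain), 3))
```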

The future

Each of the systems above handles a single task and loses accuracy once it goes beyond a certain scope. But with the progress of machine learning in recent years, research in this field keeps advancing.

Like OpenAI, researchers at the University of Washington are training robotic hands that closely resemble the real thing. This is harder than training suction cups and grippers, mainly because a human-like hand has many joints and degrees of freedom, which allow a very diverse range of movements.

At OpenAI, researchers also train manipulators in simulated environments. Running the simulation on a large amount of computer hardware and introducing a range of random variations lets the system accumulate more than 100 years of operating experience in a short period of time.
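Introducing randomness into a simulation in this way is often called domain randomization: each training episode runs with slightly different physical settings, so the learned behaviour does not depend on any one of them being exact. The Python sketch below shows only the sampling step. The parameter names and ranges are assumptions chosen for illustration, not values used by OpenAI, and no real simulator is involved.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimParams:
    """Physical settings for one simulated episode (illustrative fields only)."""
    friction: float       # surface friction coefficient
    cube_mass_kg: float   # mass of the manipulated cube
    motor_gain: float     # multiplier applied to commanded joint torque


# Hypothetical ranges: (low, high) for each randomized parameter.
RANGES = {
    "friction": (0.5, 1.5),
    "cube_mass_kg": (0.03, 0.3),
    "motor_gain": (0.8, 1.2),
}


def sample_params(rng: random.Random) -> SimParams:
    """Draw one independent uniform sample for every randomized parameter."""
    return SimParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


# A seeded generator makes the sequence of episodes reproducible.
rng = random.Random(0)
episodes = [sample_params(rng) for _ in range(1000)]
print(len(episodes), episodes[0])
```

In a full training loop, each `SimParams` instance would configure the simulator before an episode starts; that step is omitted here because it depends on the simulator in use.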

Once training is complete, this experience can be transferred to the real world. Dactyl's success validates this approach to transfer learning and makes more complex tasks possible. In the future, such tasks could be fed to a computer with powerful computing capabilities to be simulated and learned, with the results then transferred to the real world. This would unlock more and more new skills for robots. Beyond manipulators, drones, manufacturing, and even self-driving cars stand to benefit.
