The Real Time Streaming Protocol (RTSP) is a multimedia streaming protocol for controlling the delivery of audio or video, and it allows several streams to be controlled on demand at the same time. The transport protocol that carries the media is outside its scope: the server can choose TCP or UDP to deliver the stream content. Its syntax and operation are similar to HTTP/1.1, but it does not emphasize time synchronization, so it tolerates network delay relatively well. The multi-stream on-demand control mentioned above, combined with multicast, reduces network usage on the server side and therefore supports multi-party video conferencing.

Embedded video surveillance over Ethernet is increasingly widely used, and video is also sent to servers over 3G networks, for example the video data collected by a mobile robot. Streaming media technology is becoming popular as well, and applying current streaming media transmission technology to video surveillance systems is a good choice: it does not occupy too much network bandwidth and can deliver smooth surveillance video. Based on H.264 coding and secondary development of Live555, this paper designs an embedded streaming video surveillance system that can capture, encode and transmit video of citrus growth in real time.

1 Introduction to Network Monitoring Video Technology

1.1 RTSP protocol

RTSP (Real Time Streaming Protocol) belongs to the TCP/IP protocol suite. As its name suggests, it is a protocol for controlling streaming media transmission: it can pause and resume a stream during transmission, which makes it easy to implement basic playback functions in a player. It works in client/server (C/S) mode and is widely used in Internet video applications because it combines many technical advantages.

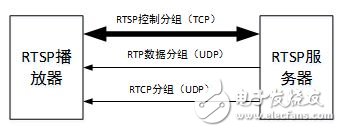

Figure 1 Relationship between RTSP, RTP and RTCP

The relationship between RTSP, RTP (Real-time Transport Protocol) and RTCP (RTP Control Protocol) is shown in Figure 1. In general, RTSP only carries control information, which lets the media player control the transmission of the stream, while the media data itself is carried by RTP. RTSP is therefore normally used in conjunction with RTP/RTCP.
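Because RTSP messages are plain text with a syntax close to HTTP/1.1, a control request is easy to illustrate. The following C++ sketch formats requests such as DESCRIBE, PLAY and PAUSE. The helper name buildRtspRequest and the session value used in the usage note are assumptions made for illustration; the helper is not part of Live555, and a real client would add further headers (for example Transport on SETUP).

```cpp
#include <cassert>
#include <string>

// Builds a minimal RTSP/1.0 request. Illustrative helper, not a Live555 API.
// method:  e.g. "DESCRIBE", "SETUP", "PLAY", "PAUSE", "TEARDOWN"
// url:     the rtsp:// URL of the stream
// cseq:    sequence number; the response echoes it so requests can be matched
// session: session ID returned by SETUP (empty before a session exists)
std::string buildRtspRequest(const std::string& method,
                             const std::string& url,
                             int cseq,
                             const std::string& session = "") {
    assert(!method.empty() && !url.empty());
    std::string req = method + " " + url + " RTSP/1.0\r\n";
    req += "CSeq: " + std::to_string(cseq) + "\r\n";
    if (method == "DESCRIBE") {
        req += "Accept: application/sdp\r\n";  // ask for the description as SDP
    }
    if (!session.empty()) {
        req += "Session: " + session + "\r\n";
    }
    req += "\r\n";  // an empty line ends the header section
    return req;
}
```

For example, buildRtspRequest("PAUSE", url, 5, "12345678") yields a PAUSE request for an existing session, which is the kind of message a player sends to suspend a stream; the media itself still travels separately over RTP.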

1.2 H.264 technology

H.264 is currently the most popular video compression coding technology. Because H.264 achieves a lower bit rate, it offers a higher compression ratio at the same image quality; compared with the previous-generation MPEG-2, the compression ratio is usually more than double. H.264 is algorithmically more complex, which allows it to deliver higher image quality than previous-generation compression techniques. Its error resilience is also strong and it adapts well to networks. In this design, H.264 compresses video with little motion very effectively, which greatly reduces the required network bandwidth. The design only uses the encoding side of H.264, with the popular x264 project, which conforms to the H.264 specification, as the encoder.

1.3 Live555 open source project

Live555 is an open source project written in C++ that provides implementations of standard streaming media protocols such as RTSP, RTP/RTCP, and SIP. It supports the current mainstream video formats such as ts, mpg, mkv, and h264, and is used by most streaming media servers. Live555 is a development platform, and players such as VLC and ffplay can play streams served by a Live555 streaming media server. Live555 has four basic libraries: BasicUsageEnvironment, UsageEnvironment, groupsock, and liveMedia. The first three do not need to be changed in this design; only the liveMedia module does. It is the most important module of Live555. It declares the Medium class, from which many other classes in the Live555 project are derived.

1.4 YUV image data analysis

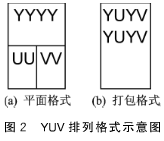

The commonly used YUV pixel formats are YUV422 and YUV420. Within YUV422, the order in which the Y, U and V samples are stored gives four different arrangements: YUYV, YVYU, UYVY, and VYUY. At present, most USB cameras output raw images in the YUYV arrangement. According to how the Y and UV components are laid out in memory, formats are also divided into packed formats and planar formats. Packed formats are also described as interleaved, while planar formats are described by the word planar (the P in YUV420P). The packed format is more common: its Y and UV samples are interleaved in one contiguous block of memory, as shown in the schematic on the right side of Figure 2. In a planar format, the Y and UV components are stored separately, as shown in the schematic on the left side of Figure 2.

Figure 2 Schematic diagram of YUV arrangement format

YUV420 uses the same kinds of arrangement as YUV422, but with a Y:U:V sample ratio of 4:1:1, because the chroma is subsampled by two both horizontally and vertically (4:2:0 subsampling). Normally the video data captured from a USB camera through V4L2 is in the packed, interleaved YUYV (YUV422) format, whereas the H.264 encoding library requires YUV420P input, the planar format with one U and one V sample for every four Y samples, so a conversion is needed. This design uses the mature libswscale library to convert the raw image data.
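To make the difference between the two layouts concrete, the following C++ sketch converts one packed YUYV frame to planar YUV420P by hand. It is only an illustration of the memory layouts: the design described here uses libswscale for this step, whose filtering and rounding may differ, and the names Yuv420pFrame and yuyvToYuv420p are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Planar YUV420 frame: full-resolution Y plane, quarter-resolution U and V planes.
struct Yuv420pFrame {
    int width;
    int height;
    std::vector<std::uint8_t> y;  // width * height samples
    std::vector<std::uint8_t> u;  // (width / 2) * (height / 2) samples
    std::vector<std::uint8_t> v;  // (width / 2) * (height / 2) samples
};

// Converts one packed YUYV (YUV422) frame to planar YUV420P.
// Each 4-byte group Y0 U Y1 V covers two horizontally adjacent pixels.
// Chroma is already halved horizontally; here it is also halved vertically
// by averaging the U (and V) samples of each pair of rows.
Yuv420pFrame yuyvToYuv420p(const std::vector<std::uint8_t>& yuyv, int width, int height) {
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    assert(yuyv.size() == static_cast<std::size_t>(width) * height * 2);

    Yuv420pFrame out;
    out.width = width;
    out.height = height;
    out.y.resize(static_cast<std::size_t>(width) * height);
    out.u.resize(static_cast<std::size_t>(width / 2) * (height / 2));
    out.v.resize(out.u.size());

    const std::size_t stride = static_cast<std::size_t>(width) * 2;  // bytes per YUYV row

    // Luma: every even byte of a YUYV row is a Y sample.
    for (int row = 0; row < height; ++row) {
        const std::uint8_t* src = yuyv.data() + row * stride;
        for (int col = 0; col < width; ++col) {
            out.y[static_cast<std::size_t>(row) * width + col] = src[col * 2];
        }
    }

    // Chroma: average two vertically adjacent rows (rounded to nearest).
    for (int row = 0; row < height; row += 2) {
        const std::uint8_t* top = yuyv.data() + row * stride;
        const std::uint8_t* bottom = top + stride;
        for (int pair = 0; pair < width / 2; ++pair) {
            const std::size_t dst = static_cast<std::size_t>(row / 2) * (width / 2) + pair;
            out.u[dst] = static_cast<std::uint8_t>((top[pair * 4 + 1] + bottom[pair * 4 + 1] + 1) / 2);
            out.v[dst] = static_cast<std::uint8_t>((top[pair * 4 + 3] + bottom[pair * 4 + 3] + 1) / 2);
        }
    }
    return out;
}
```

A 640x480 YUYV frame occupies 640 * 480 * 2 = 614400 bytes, while the converted YUV420P frame occupies 640 * 480 * 3 / 2 = 460800 bytes, which reflects the move from 16 to 12 bits per pixel.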

2 Streaming Media Server Implementation

2.1 The overall structure of the system

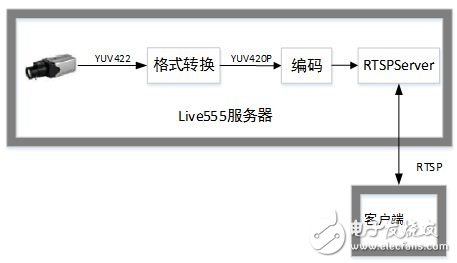

This design follows the structure of current popular video surveillance systems. It runs on the Linux platform and uses the V4L2 (Video for Linux Two) interface to capture the data delivered by the USB camera. Because common USB cameras currently only provide data in the YUYV-ordered YUV422 format, the captured data must first be converted to YUV420P. Each YUV frame is then encoded with x264, and a streaming media server is built through secondary development of the Live555 open source project. The overall block diagram of the system is shown in Figure 3.

Figure 3 System architecture diagram

In this design, the system platform is Linux and the USB camera is a Huanyufei V8, which only outputs image data in the YUYV format. x264 currently only accepts YUV420P image data as input, so, for stability and reliability, libswscale from the FFmpeg project is used to convert the data format. Finally, the RTSP server built here transmits the encoded data in real time.

2.2 Building a Streaming Media Server

Different streaming media sources arrange their data differently, so the way the SDP is obtained also differs. Besides constructing a new streaming media source, the SDP acquisition part of the code therefore needs secondary development as well. The work in this design is thus divided into the following two parts.

2.2.1 SDP acquisition code secondary development

When the RTSPServer receives a DESCRIBE request for a certain media stream, it finds the corresponding ServerMediaSession and calls ServerMediaSession::generateSDPDescription(). generateSDPDescription() iterates over all ServerMediaSubsession objects in the ServerMediaSession, obtains the SDP of each subsession through subsession->sdpLines(), and merges them into one complete SDP. The way to obtain the SDP information differs between streaming media formats. Therefore, this design builds a new session class, WebcamOndemandMediaSubsession, based on the OnDemandServerMediaSubsession class.

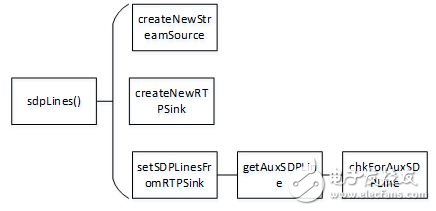

Figure 4 Function call relationships for obtaining the SDP
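The merging step described above, in which the session collects the SDP lines of each subsession into one description, can be sketched in plain C++ as follows. This is a simplified stand-in, not the Live555 implementation: SubsessionSketch and generateSdpSketch are hypothetical names, and the session-level lines use placeholder values.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Stand-in for a media subsession. In Live555 the real class is
// ServerMediaSubsession, whose sdpLines() returns the media-level SDP text.
struct SubsessionSketch {
    std::string mediaSdp;  // e.g. an "m=video ..." line plus its "a=" attributes
    const std::string& sdpLines() const { return mediaSdp; }
};

// Concatenates a session-level header with the SDP of every subsession,
// mirroring the traversal described in the text (simplified illustration).
std::string generateSdpSketch(const std::string& sessionName,
                              const std::vector<SubsessionSketch>& subsessions) {
    assert(!sessionName.empty());
    std::string sdp;
    sdp += "v=0\r\n";                       // protocol version
    sdp += "o=- 0 0 IN IP4 0.0.0.0\r\n";    // origin (placeholder values)
    sdp += "s=" + sessionName + "\r\n";     // session name
    sdp += "t=0 0\r\n";                     // unbounded session time
    for (const SubsessionSketch& sub : subsessions) {
        sdp += sub.sdpLines();              // media-level lines of one subsession
    }
    return sdp;
}
```

For an H.264 subsession the media-level text would typically contain an m=video line, an a=rtpmap line for H264/90000, and an a=fmtp line carrying the parameter sets, which is why the auxiliary SDP line has to be available before the description can be completed.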

The function call relationships for obtaining the SDP information are shown in Figure 4. The sdpLines() function is already implemented in the OnDemandServerMediaSubsession class. To obtain the SDP information correctly, some of the functions that sdpLines() calls at run time have to be modified, which means overriding several virtual functions of the OnDemandServerMediaSubsession class, as follows:

A. createNewStreamSource: because this design handles an H.264 source, the function should call H264VideoStreamFramer::createNew().

B. createNewRTPSink: for the same reason, the function should call H264VideoRTPSink::createNew().

C. setSDPLinesFromRTPSink obtains the SDP of the subsession and saves it to fSDPLines. The key step in this function is the call to getAuxSDPLine, so getAuxSDPLine must be reimplemented, and the chkForAuxSDPLine function that it calls must be overridden as well. For H.264 data, the SPS/PPS cannot be obtained from the rtpSink directly, so startPlaying must be called to start the video stream, which is stopped again once the information has been obtained. Adding print calls at connection setup makes this sequence easier to follow. In the pseudo code below, chkForAuxSDPLine is called after startPlaying to ensure that the auxiliary SDP line has been obtained before the function returns; chkForAuxSDPLine therefore has to check repeatedly until the auxiliary SDP line is available. The pseudo code is as follows.

    mp_dummy_rtpsink = sink;
    mp_dummy_rtpsink->startPlaying(*source, 0, 0);         // start playing
    chkForAuxSDPLine(this);                                 // check in a loop until the aux SDP line is available
    mp_sdp_line = strdup(mp_dummy_rtpsink->auxSDPLine());   // save a copy of the line
    mp_dummy_rtpsink->stopPlaying();                        // stop playing
    return mp_sdp_line;                                     // return the saved line

2.2.2 Building the WebcamFrameSource class

WebcamFrameSource is a source class, that is, a class that provides a video source. Most streaming media frameworks share a similar design, and Live555 also follows the source and sink concept. In short, a source is an object that generates data, and a sink is the object that the data flows to; a sink must read its data from a source. Although the Live555 project already implements many sources, none of them reads data from a live camera. One of the main tasks of the secondary development is therefore to build a new source that keeps the encoded H.264 data in memory and can pass it to H264VideoRTPSink. In this design, the WebcamFrameSource class is derived from FramedSource.

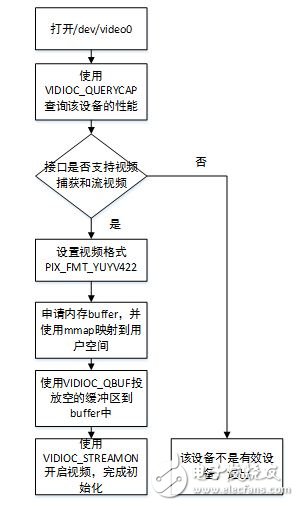

For the WebcamFrameSource class, the main development work is to set up V4L2 camera capture and the x264 encoding parameters in its constructor. Because it is a source class, the doGetNextFrame function is called each time data is fetched from it. This function is a virtual function of the FramedSource class and is overridden in the newly constructed WebcamFrameSource class. The initialization process for the V4L2 device is shown in Figure 5.

Figure 5 V4L2 initialization settings

The pseudo code for the initial configuration of the x264 video encoder is shown below.

    ctx->force_keyframe = 0;                                         // do not force keyframes
    x264_param_default_preset(&ctx->param, "fast", "zerolatency");   // fast preset, tuned for zero latency
    ctx->param.b_repeat_headers = 1;                                 // repeat SPS/PPS before each keyframe
    ctx->param.b_cabac = 1;                                          // enable CABAC entropy coding
    ctx->param.i_threads = 1;                                        // single thread, so fetching buffered data cannot deadlock
    ctx->param.i_fps_num = 30;                                       // frame rate of 30
    ctx->param.rc.i_bitrate = 150;                                   // default bitrate (x264 expresses this in kbit/s)
    ctx->x264 = x264_encoder_open(&ctx->param);                      // open the encoder with the parameters set above
    x264_picture_init(&ctx->picture);                                // initialize the output picture

In addition, libswscale needs to be initialized. In this design, libswscale is mainly used to convert the YUV422 data output by V4L2 to YUV420P. The initialization mainly configures the image height and width and the input and output formats; the main functions involved are sws_getContext and avpicture_alloc, which are not described in detail here.

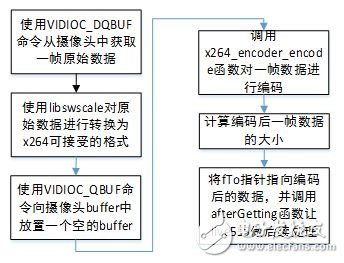

When data is fetched from the WebcamFrameSource streaming source, the doGetNextFrame function is called. Because it is a virtual function defined in the FramedSource class, it has to be overridden when building the WebcamFrameSource class. The flow for sending one frame of data from the streaming server is shown in Figure 6.

Figure 6 Data transmission flow chart

Following this flow, the new streaming media source is constructed. Setting the delay between reading two successive frames according to the configured frame rate is enough to meet the real-time requirement.
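The relation between the frame rate and the delay between two frame reads can be written down directly. The following C++ sketch is an assumption about how such pacing could be computed, not the code used in this design; the function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Microseconds between two successive frames at the given frame rate,
// expressed as a fraction fpsNum/fpsDen (30/1 means 30 frames per second).
std::int64_t frameIntervalMicros(int fpsNum, int fpsDen = 1) {
    assert(fpsNum > 0 && fpsDen > 0);
    return (static_cast<std::int64_t>(1000000) * fpsDen) / fpsNum;
}

// Remaining delay before the next frame should be read, given how long
// capture and encoding of the current frame took. Never negative.
std::int64_t delayUntilNextFrame(std::int64_t intervalMicros, std::int64_t elapsedMicros) {
    assert(intervalMicros > 0 && elapsedMicros >= 0);
    return elapsedMicros >= intervalMicros ? 0 : intervalMicros - elapsedMicros;
}
```

At 30 frames per second the interval is about 33 ms, and at 15 frames per second about 67 ms. In a Live555 source, one common way to apply such a delay is to pass it to the task scheduler's scheduleDelayedTask so that the next read is triggered from the event loop; whether this design does exactly that is not stated in the text.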

3 System implementation

3.1 Software and hardware environment

The server hardware is built around TI's DM3730 processor, which is based on the Cortex-A8 architecture and clocked at 1 GHz, with 256 MB of RAM, together with the common Huanyufei V8 USB camera. On the software side, it runs the Linux 2.3.32 kernel with the Angstrom file system.

The client is an ordinary PC running Ubuntu 10.04. The client program is ffplay from the FFmpeg project, a player that supports RTSP on-demand playback.

3.2 Porting notes

The libraries that need to be compiled for this design are x264 and libswscale; V4L2 is already part of the Linux kernel drivers. x264 is available as a separate project, while libswscale is included in the FFmpeg project.

To build x264, the source code of version 20140104-2245 was downloaded directly from the official x264 website and configured with the following command.

./configure --prefix=/home/x264 --enable-shared --enable-static --enable-debug --cross-prefix=arm-none-linux-gnueabi- --host=arm-linux

Roughly, the command sets the installation directory to /home/x264, builds both static and shared libraries, enables debugging, and selects the cross-compilation toolchain. After configuration, run make; make install to obtain the files built for the target board. Then copy x264.h and x264_config.h to the /usr/include directory of the target board and copy the library files to its /usr/lib directory.

To get libswscale, you need to compile FFmpeg first. The FFmpeg version number used in this design is 0.8.15. The command to configure FFmpeg is as follows.

./configure --prefix=/home/FFmpeginstall/ --enable-shared --target-os=linux --enable-cross-compile --cross-prefix=arm-none-linux-gnueabi- --arch=arm

After configuration, run make; make install. Then copy the libswscale directory from the installation directory to the /usr/include directory of the target board, and copy libswscale.a and libswscale.so from the lib directory to /usr/lib.

Finally, use the cross-compilation toolchain to build the server program for the target board, linking it against the compiled libraries. After compiling, copy the executable to the /opt directory of the target board.

3.3 Optimization

Because the system runs on an embedded platform with limited resources and streaming playback must be real-time, some adjustments are needed. In this design, the processing load is concentrated in the encoding stage. To reduce the encoding burden, libswscale is used to scale the 640x480 video stream down to 320x240, and the frame rate is lowered and finally fixed at 15 frames per second. To ensure real-time performance, the x264 encoding parameters use the fast preset with zero-latency tuning.
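The effect of these adjustments on the amount of raw data the encoder has to process can be estimated with a short calculation. The C++ sketch below computes the uncompressed bit rate of a YUV420P stream; the function name is hypothetical, and the comparison with the encoder bitrate assumes that the 150 kbit/s value from the x264 settings is actually in effect, which the text does not confirm.

```cpp
#include <cassert>
#include <cstdint>

// Uncompressed bit rate of a YUV420P stream in bits per second.
// YUV420P stores 12 bits per pixel on average: 8 for Y plus 2 each for U and V.
std::int64_t rawYuv420BitsPerSecond(int width, int height, int fps) {
    assert(width > 0 && height > 0 && fps > 0);
    return static_cast<std::int64_t>(width) * height * 12 * fps;
}
```

At 640x480 and 30 frames per second the raw stream is 110592000 bit/s, while at 320x240 and 15 frames per second it is 13824000 bit/s, one eighth of the original load. Against a 150 kbit/s encoded stream, the reduced input would correspond to a compression ratio of roughly 92 to 1.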

3.4 System test run

After the server is started, connect the client PC to the target board over Ethernet and set the network address of the client PC so that both are on the same IP subnet. Then run ffplay rtsp://192.168.71.128:9554/webcam on the client.

Conclusion

Against the background of widespread RTSP use, this paper builds an RTSP streaming media server that combines real-time video capture, encoding and embedded technology, and uses a common RTSP-capable player as the client to form a video surveillance system. The whole system is low in cost, stable and reliable, its load capacity can basically meet the requirements of small and medium-sized applications, and it has some reference value.
